Making Of Artificial Brain!

So in my last blog, I explained what makes AI different from any other simple device. Now you may ask “But how are they made?”

To answer this, we have to go deeper into a Machine Learning process, called “Training”.

In this process, we feed data into our model, and the model uses this data according to its algorithm.

Want to know only the training process? You can skip directly to the part where we train a NN, but it’s suggested to read the full post for better understanding.

But as usual, for my non-techie readers, this blog is going to be a smooth ride!

So…

Welcome to Aviral’s Code Blog where Hard concepts meet easy explanations…😉

So, at the start, I used the term “Training”.

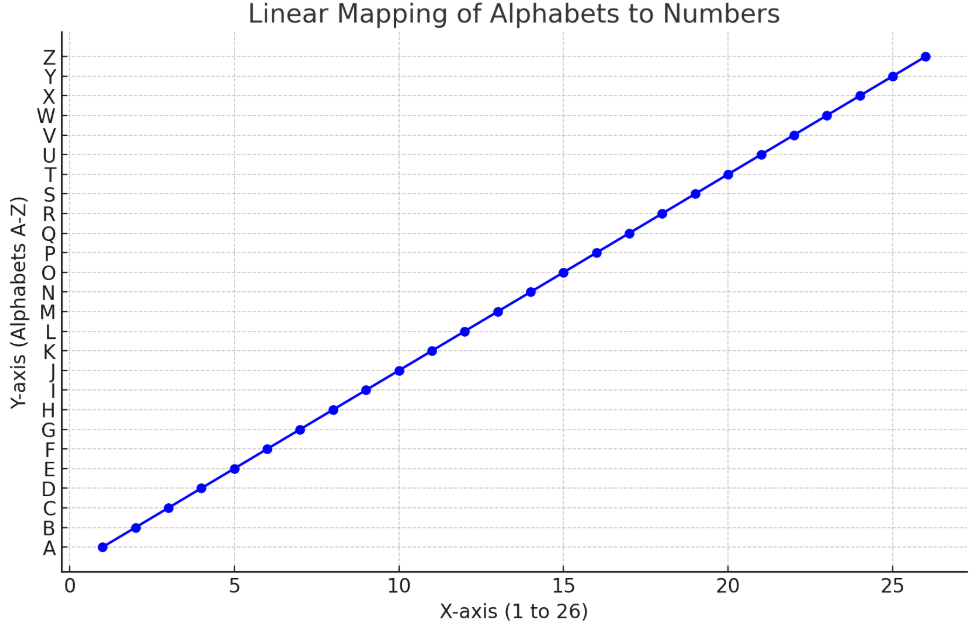

For a more relatable example: If I say, A = 1, B = 2, C = 3… all the way to Y = 25, and then ask, What’s the value of Z? You’d immediately think, “26!”

Similarly, we have an algorithm in ML called Linear Regression. As the name suggests, it fits a straight line to the data.

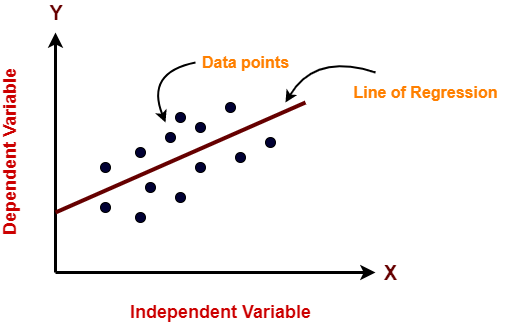

So what is it? It works much like the reasoning you used in the ABCD example above. Here is what it looks like:

In this model, we give values and a label corresponding to each value.

In the graph, you can see the Dependent variable and the Independent variable, which are the Label and the Value respectively. (The Label depends on the Value.)

So it plots a graph using the data points and draws a line of regression through them.

To make it clearer, here is the graph of the approach you took in the ABCD example:

Using this, your LR model can predict the value of any letter of the alphabet, even when some letters in between are missing! (But in reality, data points are rarely aligned so neatly in a line. They scatter around, and the task is to find the best line of regression, the one that predicts other Labels from their Values most precisely!)
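To make the ABCD example concrete, here is a minimal sketch in plain Python. It is only an illustration, not how a real ML library does it: the helper names `fit_line` and `predict` are made up for this post, and I am assuming we turn each letter into a number (its position in the alphabet) so it can be used as the Value. A few letters are left out on purpose to show that the line still fills the gaps.

```python
import string

def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = how much y changes with x (covariance divided by variance of x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Value = position of the letter in the alphabet (A -> 0, B -> 1, ...).
# Label = the number we assigned to it (A = 1, B = 2, ...).
# D, K, Q and Z are deliberately missing from the training data.
known_letters = [c for c in string.ascii_uppercase if c not in "DKQZ"]
values = [ord(c) - ord("A") for c in known_letters]
labels = [v + 1 for v in values]

slope, intercept = fit_line(values, labels)

def predict(letter):
    """Predict the Label of a letter using the fitted line."""
    return slope * (ord(letter) - ord("A")) + intercept

print(round(predict("Z")))  # a letter the model never saw
print(round(predict("K")))  # a letter missing from the middle
```

Because this toy data sits perfectly on a line, the fit is exact. With real, scattered data the same formula gives the line with the smallest total squared error.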

So the training process involves feeding in data in the form of Labels and Values. Then, using the algorithm (mathematical functions written in the form of code), the model tries to find patterns in the given data, and TADA!

Give an input and get an output in accordance with the data given.

This is the simplest form of ML model, used for simple predictions. But when we talk about Neural Networks, things are not so simple, and not that boring either!

If you know how neural networks work, you will really enjoy how they are trained.

Before we dive in further, did you know? Neural Networks are what make AI capable of tasks like recognizing faces, translating languages, or even playing chess like a pro!

From the last blog we know the ingredients (Neurons, Layers and algorithms), what they are and also exactly how they work.

So now the next part is to train our “Cat or not” prediction model! Can you recall? 🙀

Neural Networks learn through a process called Backpropagation 😮💨

that’s it!

Don’t worry, here’s a simple comparison:

Think about teaching a child to play basketball.

You start by explaining the rules and showing them how to dribble. As they practice, they might miss some shots. You help them understand what went wrong, and they change their technique.

Neural Networks learn in a similar way:

- Feeding Data: You give them labeled data, like pictures of cats marked as “cat”.

- Calculating Errors: They compare what they predicted with the actual label to see how close they were. (they predict using mathematical algorithms)

- Adjusting Weights: They use Backpropagation to adjust the weights and biases, improving their predictions with each try.

Wait! Weights and biases?

- Weights decide how much importance to give to each input (like age, height, or temperature) when making a decision.

- Bias is a fixed amount added on top of the weighted inputs. It shifts the result to make the final prediction more accurate, no matter what the inputs are.

So, just like in cooking, weights and bias help AI “mix” the ingredients (data) to get the best “dish!” (prediction or result). 😊

Weights are like the importance of each ingredient, that is, how much of each one should be added. Bias is something you do to adjust the taste: if your dish somehow tastes extra salty every time, no matter how much you try, then after some trials you automatically add 20ml of water to fix the taste! (idk if in real life that’s sufficient or not 😅)
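In code, the “mixing” is just a multiply-and-add. Here is a tiny sketch of what a single neuron does with its weights and bias. The function name `neuron_output` and all the numbers are made up for illustration, and a real neuron would usually also pass the result through an activation function.

```python
def neuron_output(inputs, weights, bias):
    """Multiply each input by its weight, add everything up, then add the bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias

# Hypothetical inputs: age (years), height (m), temperature (°C)
inputs = [30.0, 1.7, 25.0]
# Hypothetical weights: age matters a bit, height matters a lot,
# temperature gets a weight of 0, so it is ignored completely
weights = [0.5, 2.0, 0.0]
# The bias is added no matter what the inputs are (the "20ml of water")
bias = -1.0

result = neuron_output(inputs, weights, bias)
print(result)
```

Training never changes the inputs. It only nudges the numbers in `weights` and `bias` until the outputs match the labels better.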

So, as the name suggests, Backpropagation has two parts: “Propagation” and its “Backwards” direction.

Propagation: Think of it as “sending something forward or backward.” In this case, it’s about sending information through the layers of a neural network.

Backwards: The process works backwards, starting from the output (the final result) and moving to the earlier layers (closer to the input).
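To see the three steps (feeding data, calculating errors, adjusting weights) together with the backwards direction, here is a minimal sketch of a tiny network in plain Python. Everything in it is an assumption made for illustration: the two made-up features (`has_whiskers`, `has_pointy_ears`), the network size (2 inputs, 2 hidden neurons, 1 output), the sigmoid activation, the squared error, and the learning rate. Real projects use libraries that do this for you.

```python
import math
import random

random.seed(0)  # fixed seed so the run is repeatable

def sigmoid(z):
    """Squash any number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical toy data: [has_whiskers, has_pointy_ears] -> 1.0 if cat, else 0.0
data = [([1.0, 1.0], 1.0), ([1.0, 0.0], 0.0), ([0.0, 1.0], 0.0), ([0.0, 0.0], 0.0)]

HIDDEN = 2
w_hidden = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(HIDDEN)]
b_hidden = [0.0] * HIDDEN
w_out = [random.uniform(-1, 1) for _ in range(HIDDEN)]
b_out = 0.0

def forward(x):
    """Forward pass: input -> hidden layer -> output."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output

def total_error():
    """Sum of squared differences between predictions and labels."""
    return sum((forward(x)[1] - y) ** 2 for x, y in data)

def train(epochs=2000, lr=0.5):
    global b_out  # b_out is a plain number, so it has to be reassigned
    for _ in range(epochs):
        for x, y in data:
            # 1. Feeding data: run the example through the network
            hidden, output = forward(x)
            # 2. Calculating errors: start at the OUTPUT
            delta_out = (output - y) * output * (1 - output)
            # ...then move BACKWARDS: share the blame with each hidden neuron
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1 - hidden[j])
                            for j in range(HIDDEN)]
            # 3. Adjusting weights and biases, a small step at a time
            for j in range(HIDDEN):
                w_out[j] -= lr * delta_out * hidden[j]
                for i in range(len(x)):
                    w_hidden[j][i] -= lr * delta_hidden[j] * x[i]
                b_hidden[j] -= lr * delta_hidden[j]
            b_out -= lr * delta_out

error_before = total_error()
train()
error_after = total_error()
print(error_before, error_after)  # the error should shrink after training
```

Notice the order inside `train`: the error is first measured at the output (`delta_out`), and only then passed back to the hidden layer (`delta_hidden`). That is the “backwards” in Backpropagation.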
